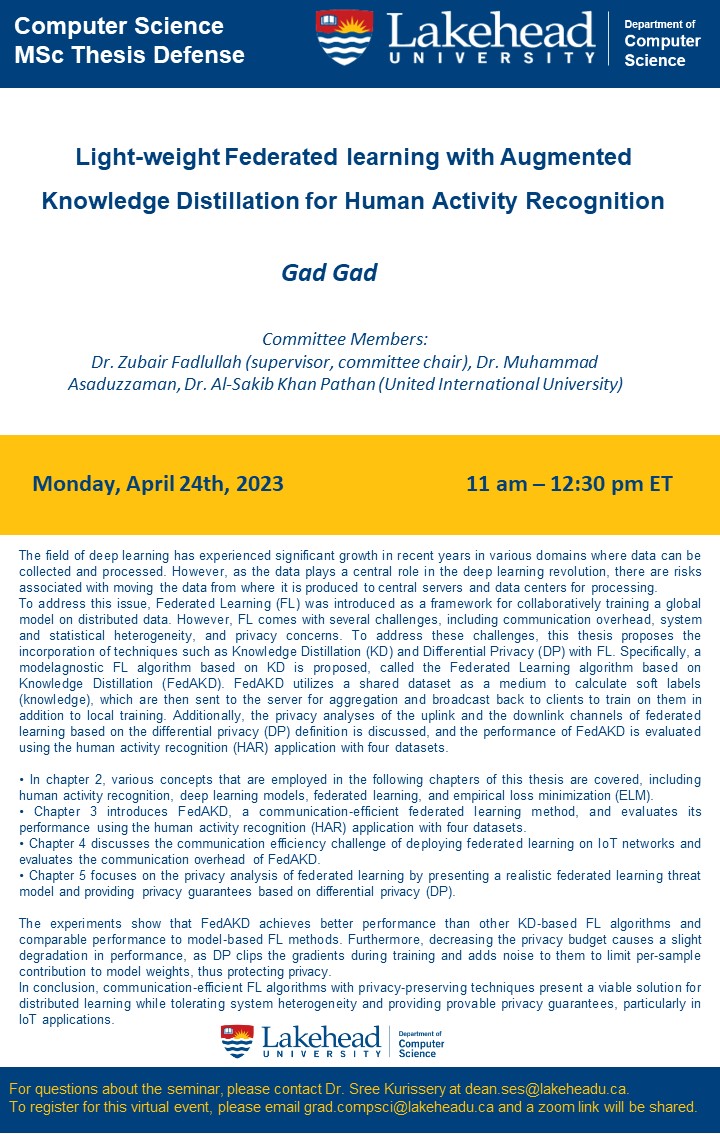

Computer Science Department Thesis Defense - Gad Gad

Please join the Computer Science Department for the upcoming thesis defense:

Presenter: Gad Gad

Thesis title: Light-weight Federated learning with Augmented Knowledge Distillation for Human Activity Recognition

Abstract: Deep learning has grown rapidly in recent years across many domains in which data can be collected and processed. However, because data plays a central role in the deep learning revolution, moving it from where it is produced to central servers and data centers for processing carries risks.

To address this issue, Federated Learning (FL) was introduced as a framework for collaboratively training a global model on distributed data. However, FL comes with several challenges, including communication overhead, system and statistical heterogeneity, and privacy concerns. To address these challenges, this thesis proposes incorporating techniques such as Knowledge Distillation (KD) and Differential Privacy (DP) into FL. Specifically, it proposes a model-agnostic FL algorithm based on KD, called the Federated Learning algorithm based on Augmented Knowledge Distillation (FedAKD). In FedAKD, clients use a shared dataset as a medium to compute soft labels (knowledge), which they send to the server for aggregation; the server broadcasts the aggregated soft labels back to the clients, which train on them in addition to their local data. The thesis also analyzes the privacy of the uplink and downlink channels of FL under the DP definition, and evaluates FedAKD on the human activity recognition (HAR) application using four datasets.
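The soft-label exchange described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the FedAKD implementation: the function names are hypothetical, the temperature-scaled softmax is borrowed from standard knowledge distillation, the server is assumed to take a plain element-wise average, and the "augmented" part of FedAKD is not modeled. Because clients upload only probabilities on the shared dataset, not weights, each client is free to use a different model architecture.

```python
import math
from typing import List

# A matrix of shape (num_shared_samples, num_classes), stored as nested lists.
Matrix = List[List[float]]


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def client_soft_labels(logits_on_shared: Matrix, temperature: float = 1.0) -> Matrix:
    """Client side (hypothetical helper): turn the local model's outputs on the
    shared dataset into soft labels. Only these probabilities are uploaded."""
    return [softmax(row, temperature) for row in logits_on_shared]


def aggregate_soft_labels(uploads: List[Matrix]) -> Matrix:
    """Server side (hypothetical helper): element-wise mean of the clients'
    soft labels. A plain average is an assumption of this sketch."""
    n_clients = len(uploads)
    n_samples, n_classes = len(uploads[0]), len(uploads[0][0])
    return [
        [sum(u[i][k] for u in uploads) / n_clients for k in range(n_classes)]
        for i in range(n_samples)
    ]


def distillation_loss(student_probs: Matrix, global_labels: Matrix) -> float:
    """Client side, after the broadcast: mean cross-entropy between the
    aggregated soft labels (targets) and the client's own predictions."""
    total = 0.0
    for s_row, t_row in zip(student_probs, global_labels):
        total -= sum(t * math.log(max(s, 1e-12)) for s, t in zip(s_row, t_row))
    return total / len(student_probs)


# Usage: two clients, two shared samples, three activity classes.
client_a = client_soft_labels([[2.0, 1.0, 0.1], [0.0, 0.0, 3.0]], temperature=2.0)
client_b = client_soft_labels([[0.5, 2.5, 0.0], [1.0, 0.0, 2.0]], temperature=2.0)
global_labels = aggregate_soft_labels([client_a, client_b])
print(distillation_loss(client_a, global_labels))
```

In a full round, each client would add this distillation term to its ordinary supervised loss on local data; how the two terms are weighted is not specified here and is left open.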

• Chapter 2 covers the concepts used in the following chapters, including human activity recognition, deep learning models, federated learning, and empirical loss minimization (ELM).

• Chapter 3 introduces FedAKD, a communication-efficient federated learning method, and evaluates its performance on the HAR application using four datasets.

• Chapter 4 discusses the communication efficiency challenge of deploying federated learning on IoT networks and evaluates the communication overhead of FedAKD.

• Chapter 5 focuses on the privacy analysis of federated learning, presenting a realistic federated learning threat model and providing privacy guarantees based on DP.

The experiments show that FedAKD outperforms other KD-based FL algorithms and performs comparably to model-based FL methods. Furthermore, decreasing the privacy budget (that is, demanding stronger privacy) causes a slight degradation in performance, because DP training clips the gradients and adds noise to them, limiting how much any single sample can contribute to the model weights.
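The clip-then-add-noise step mentioned above can be illustrated with a DP-SGD-style gradient computation. This is a minimal sketch, not the thesis's training code: the function and parameter names (`max_norm`, `noise_multiplier`) are hypothetical, gradients are plain Python lists, and the privacy accountant that maps a privacy budget ε to a noise multiplier is not shown. A smaller ε generally calls for a larger noise multiplier, which is why accuracy drops as the budget shrinks.

```python
import math
import random
from typing import List

Vector = List[float]


def clip_to_norm(grad: Vector, max_norm: float) -> Vector:
    """Scale a per-sample gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    scale = max_norm / norm
    return [g * scale for g in grad]


def dp_sgd_gradient(
    per_sample_grads: List[Vector],
    max_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> Vector:
    """Clip every per-sample gradient, sum them, add Gaussian noise with
    standard deviation noise_multiplier * max_norm, and average over the batch."""
    clipped = [clip_to_norm(g, max_norm) for g in per_sample_grads]
    dim = len(clipped[0])
    summed = [sum(g[j] for g in clipped) for j in range(dim)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(per_sample_grads) for v in noisy]


# Usage: one sample with a large gradient, one with a small gradient.
grads = [[3.0, 4.0], [0.0, 0.5]]
update = dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=random.Random(0))
print(update)
```

Clipping bounds each sample's influence on the update to `max_norm`, and the noise is scaled to that same bound, so no single sample can shift the model by more than the noise can mask.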

In conclusion, communication-efficient FL algorithms combined with privacy-preserving techniques offer a viable approach to distributed learning, particularly in IoT applications, because they tolerate system heterogeneity and provide provable privacy guarantees.

Committee Members:

Dr. Zubair Fadlullah (supervisor, committee chair), Dr. Muhammad Asaduzzaman, Dr. Al-Sakib Khan Pathan (United International University)

Please contact grad.compsci@lakeheadu.ca for the Zoom link.

Everyone is welcome.